Environment

The environment used for this walkthrough is as follows:

| Service | Version |
|---|---|
| Kubernetes | 1.23.5 |
| Ceph | nautilus 14.2.22 |

[root@k8s-01 ~]# kubectl get pod -n ceph
NAME                                         READY   STATUS    RESTARTS         AGE
csi-rbdplugin-dzmdk                          3/3     Running   3 (15h ago)      3d1h
csi-rbdplugin-h8j2v                          3/3     Running   3 (111s ago)     3d1h
csi-rbdplugin-h9k95                          3/3     Running   3 (15h ago)      3d1h
csi-rbdplugin-hvq58                          3/3     Running   3 (22h ago)      3d1h
csi-rbdplugin-ngktg                          3/3     Running   3 (15h ago)      3d1h
csi-rbdplugin-provisioner-7557ccdb7c-4qczh   7/7     Running   7 (22h ago)      3d1h
csi-rbdplugin-provisioner-7557ccdb7c-5tx4r   7/7     Running   9 (15h ago)      3d1h
csi-rbdplugin-provisioner-7557ccdb7c-t8877   7/7     Running   0                15h

[root@k8s-01 ~]# kubectl get sc
NAME         PROVISIONER        RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
csi-rbd-sc   rbd.csi.ceph.com   Delete          Immediate           true                   3d1h

[root@ceph-01 ~]# ceph -s
  cluster:
    id:     c8ae7537-8693-40df-8943-733f82049642
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-01,ceph-02,ceph-03 (age 15h)
    mgr: ceph-02(active, since 15h), standbys: ceph-03, ceph-01
    mds: cephfs-abcdocker:1 cephfs:1 {cephfs-abcdocker:0=ceph-02=up:active,cephfs:0=ceph-03=up:active} 1 up:standby
    osd: 4 osds: 4 up (since 15h), 4 in (since 3w)
    rgw: 2 daemons active (ceph-01, ceph-02)

  task status:

  data:
    pools:   13 pools, 656 pgs
    objects: 3.25k objects, 11 GiB
    usage:   36 GiB used, 144 GiB / 180 GiB avail
    pgs:     656 active+clean

Prometheus

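Installing Prometheus on Kubernetes was covered in an earlier article, so it is not repeated in detail here. This setup uses Ceph RBD for persistent storage, and the StorageClass has already been created.

Create the PVC for persistent data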

[root@k8s-01 prometheus]# kubectl create ns prometheus
namespace/prometheus created

cat >prometheus-pvc.yaml<<EOF
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: prometheus-data-db      # PVC name; changing it is not recommended (the Deployment references it)
  namespace: prometheus         # must be the namespace the Prometheus Deployment runs in
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi             # requested PVC size
  storageClassName: csi-rbd-sc  # my StorageClass; change it to match your environment
EOF

# Create the PVC
[root@k8s-01 prometheus]# kubectl apply -f prometheus-pvc.yaml
persistentvolumeclaim/prometheus-data-db created

# Check the PVC status
[root@k8s-01 prometheus]# kubectl get pvc -n prometheus
NAME                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
prometheus-data-db   Bound    pvc-4c5cb159-2f59-44d8-840d-6ee6ceeb1f4c   50Gi       RWO            csi-rbd-sc     8s

Install Prometheus

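Prometheus itself was introduced in an earlier article. The only change this time is that the storage backend is switched from NFS to Ceph, and the Deployment has been updated to keep up with newer versions.

First, configure the ConfigMap.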
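Two relabel rules in the ConfigMap that follows do plain regex substitution on `__address__`, and they are easy to get subtly wrong. The sketch below mimics them with `sed -E` so the effect can be checked offline. It is only an approximation: Prometheus uses RE2 syntax and anchors the whole regex implicitly, whereas the POSIX patterns here are anchored explicitly and spell `\d` as `[0-9]`. The function names and sample addresses are made up for illustration.

```shell
#!/bin/sh
# Approximates two relabel rules from prometheus.yml with sed.
# Function names and sample addresses are illustrative assumptions.

# kubernetes-node job: regex '(.*):10250' with replacement '${1}:9100'
# (point the target at node-exporter instead of the kubelet port).
rewrite_node_address() {
  printf '%s\n' "$1" | sed -E 's/^(.*):10250$/\1:9100/'
}

# kubernetes-service-endpoints job: the two source labels are joined with ';',
# regex '([^:]+)(?::\d+)?;(\d+)' with replacement '$1:$2'
# (swap in the port taken from the prometheus.io/port annotation).
rewrite_endpoint_address() {
  printf '%s;%s\n' "$1" "$2" | sed -E 's/^([^:]+)(:[0-9]+)?;([0-9]+)$/\1:\3/'
}

rewrite_node_address "192.168.0.11:10250"           # prints 192.168.0.11:9100
rewrite_endpoint_address "10.244.1.5:8080" "9102"   # prints 10.244.1.5:9102
```

An address that does not end in `:10250` passes through unchanged, which mirrors how a `replace` action leaves the target label alone when the regex does not match.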

[root@k8s-01 prometheus]# cat prometheus.configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: prometheus
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 15s
    scrape_configs:
    - job_name: 'prometheus'
      static_configs:
      - targets: ['localhost:9090']

    - job_name: 'kubernetes-node'
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - source_labels: [__address__]
        regex: '(.*):10250'
        replacement: '${1}:9100'
        target_label: __address__
        action: replace

    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

    - job_name: kubernetes-nodes-cadvisor
      scrape_interval: 10s
      scrape_timeout: 10s
      scheme: https  # remove if you want to scrape metrics on insecure port
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      kubernetes_sd_configs:
      - role: node
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor
      metric_relabel_configs:
      - action: replace
        source_labels: [id]
        regex: '^/machine\.slice/machine-rkt\\x2d([^\\]+)\\.+/([^/]+)\.service$'
        target_label: rkt_container_name
        replacement: '${2}-${1}'
      - action: replace
        source_labels: [id]
        regex: '^/system\.slice/(.+)\.service$'
        target_label: systemd_service_name
        replacement: '${1}'

    - job_name: kube-state-metrics
      static_configs:
      - targets: ['kube-state-metrics.prometheus.svc.cluster.local:8080']  # Service address; if kube-state-metrics runs in another namespace, replace "prometheus" with that namespace

Next, configure the RBAC manifest. Without it the Deployment cannot create its Pod (the prometheus ServiceAccount it references would be missing), and Prometheus would have no permission to query the API server for service discovery.

[root@k8s-01 prometheus]# cat prometheus-rbac.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus
  namespace: prometheus
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus
rules:
- apiGroups:
  - ""
  resources:
  - nodes
  - services
  - endpoints
  - pods
  - nodes/proxy
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - ""
  resources:
  - configmaps
  - nodes/metrics
  verbs:
  - get
- nonResourceURLs:
  - /metrics
  verbs:
  - get
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: prometheus
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: prometheus
subjects:
- kind: ServiceAccount
  name: prometheus
  namespace: prometheus

Create the Prometheus container, running as a Deployment

[root@k8s-01 prometheus]# cat prometheus.deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: prometheus
  namespace: prometheus
  labels:
    app: prometheus
spec:
  selector:
    matchLabels:
      app: prometheus
  template:
    metadata:
      labels:
        app: prometheus
    spec:
      serviceAccountName: prometheus
      containers:
      - image: prom/prometheus:latest
        name: prometheus
        command:
        - "/bin/prometheus"
        args:
        - "--config.file=/etc/prometheus/prometheus.yml"
        - "--storage.tsdb.path=/prometheus"
        - "--storage.tsdb.retention.time=30d"  # replaces the deprecated --storage.tsdb.retention flag
        - "--web.enable-admin-api"  # enables the admin HTTP API, which includes operations such as deleting time series
        - "--web.enable-lifecycle"  # enables hot reload: send a POST to localhost:9090/-/reload and the new config takes effect immediately
        ports:
        - containerPort: 9090
          protocol: TCP
          name: http
        volumeMounts:
        - mountPath: "/prometheus"
          subPath: prometheus
          name: data
        - mountPath: "/etc/prometheus"
          name: config-volume
        resources:
          requests:
            cpu: 100m
            memory: 512Mi
          limits:
            cpu: 100m
            memory: 512Mi
      securityContext:
        runAsUser: 0
      volumes:
      - name: data
        persistentVolumeClaim:
          claimName: prometheus-data-db
      - configMap:
          name: prometheus-config
        name: config-volume

Next, we also need to create a Service (svc) for Prometheus.

Grafana and Prometheus will be deployed separately later on, so I use NodePort here. If you have an Ingress, you can use ClusterIP instead.

[root@k8s-01 prometheus]# cat prometheus-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: prometheus
  namespace: prometheus
  labels:
    app: prometheus
spec:
  selector:
    app: prometheus
  type: NodePort
  ports:
  - name: web
    port: 9090
    targetPort: http

Next, set up node-exporter on the K8s nodes

[root@k8s-01 prometheus]# cat prometheus-node.yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: prometheus
  labels:
    name: node-exporter
spec:
  selector:
    matchLabels:
      name: node-exporter
  template:
    metadata:
      labels:
        name: node-exporter
    spec:
      hostPID: true
      hostIPC: true
      hostNetwork: true
      containers:
      - name: node-exporter
        image: prom/node-exporter:v0.16.0
        ports:
        - containerPort: 9100
        resources:
          requests:
            cpu: 0.15
        securityContext:
          privileged: true
        args:
        - --path.procfs
        - /host/proc
        - --path.sysfs
        - /host/sys
        - --collector.filesystem.ignored-mount-points
        - '^/(sys|proc|dev|host|etc)($|/)'  # no inner double quotes: args are not passed through a shell, so they would become part of the regex
        volumeMounts:
        - name: dev
          mountPath: /host/dev
        - name: proc
          mountPath: /host/proc
        - name: sys
          mountPath: /host/sys
        - name: rootfs
          mountPath: /rootfs
      tolerations:
      - key: "node-role.kubernetes.io/master"
        operator: "Exists"
        effect: "NoSchedule"
      volumes:
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
      - name: sys
        hostPath:
          path: /sys
      - name: rootfs
        hostPath:
          path: /

Once all the configuration files are ready, simply run kubectl apply -f .

[root@k8s-01 prometheus]# kubectl apply -f .

daemonset.apps/node-exporter created

persistentvolumeclaim/prometheus-data-db created

serviceaccount/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created

service/prometheus created

configmap/prometheus-config created

deployment.apps/prometheus created检查pod运行状态

[root@k8s-01 prometheus]# kubectl get all -n prometheus

NAME READY STATUS RESTARTS AGE

pod/node-exporter-5jdhv 1/1 Running 0 16s

pod/node-exporter-5pqth 1/1 Running 0 16s

pod/node-exporter-fhj49 1/1 Running 0 17s

pod/node-exporter-g7kc9 1/1 Running 0 16s

pod/node-exporter-v5znf 1/1 Running 0 16s

pod/prometheus-79f49d55b5-6qgc8 1/1 Running 0 13s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/prometheus NodePort 10.99.37.99 <none> 9090:30353/TCP 15s

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/node-exporter 5 5 5 5 5 <none> 17s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/prometheus 1/1 1 1 13s

NAME DESIRED CURRENT READY AGE

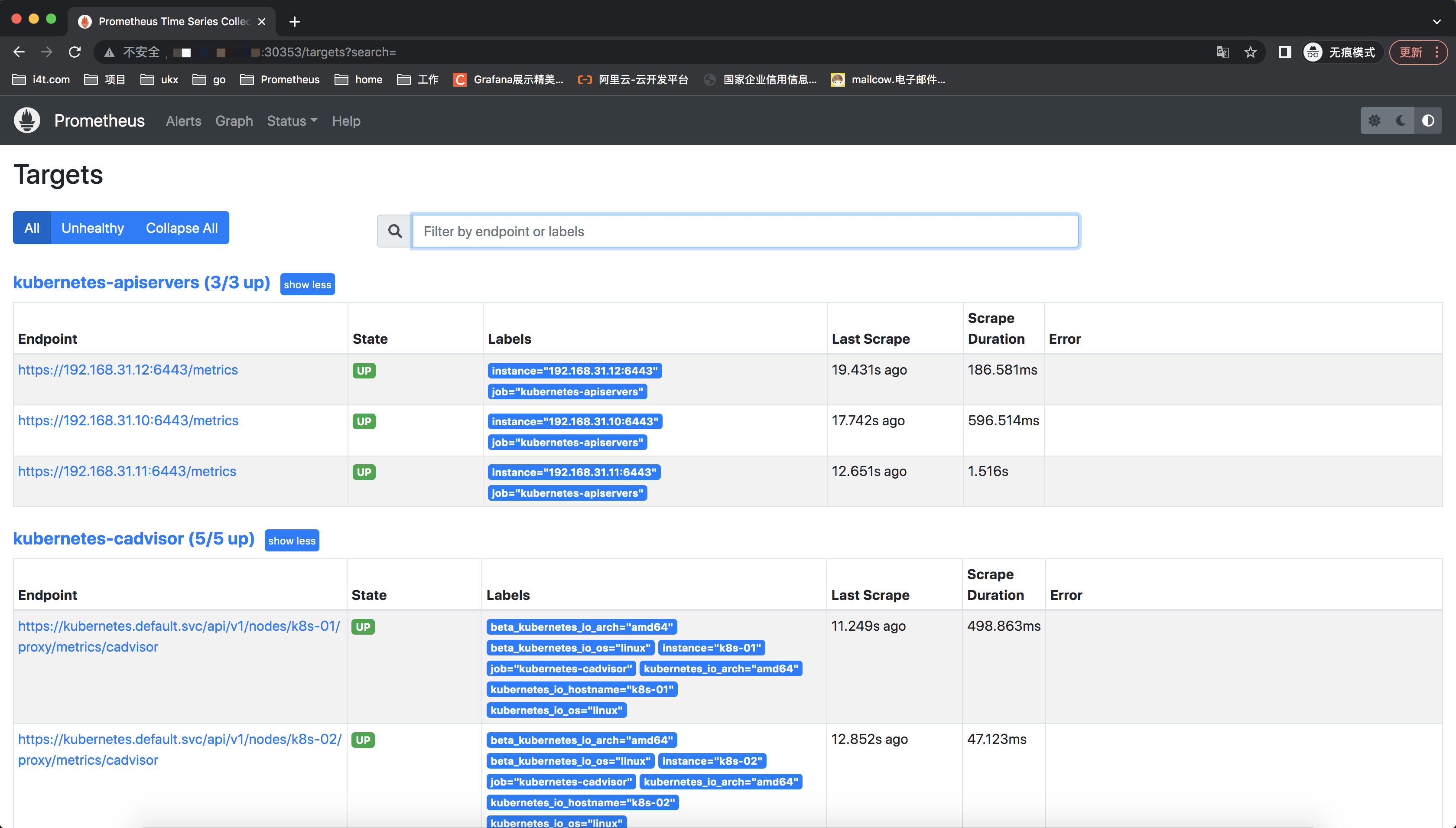

replicaset.apps/prometheus-79f49d55b5 1 1 1 13s访问prometheus测试
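Besides opening the web UI, the deployment can be checked from the command line. The sketch below assumes Prometheus is reachable on a node IP at the NodePort shown above (30353 in this run; yours will likely differ) and uses Prometheus's `/-/ready`, `/api/v1/targets`, and `/-/reload` HTTP endpoints. The function names, the `PROM_ADDR` variable, and the sample IP are my own placeholders, not part of any tool.

```shell
#!/bin/sh
# PROM_ADDR is an assumed placeholder: <any node IP>:<NodePort of service/prometheus>.
PROM_ADDR="${PROM_ADDR:-192.168.0.11:30353}"

# Readiness endpoint; curl -f turns HTTP errors into a non-zero exit status.
prom_ready() {
  curl -fsS "http://${PROM_ADDR}/-/ready"
}

# Reads the JSON returned by /api/v1/targets on stdin and prints one
# "<health>=<count>" line per health state (up, down, unknown).
summarize_health() {
  grep -o '"health":"[a-z]*"' | cut -d'"' -f4 | sort | uniq -c |
    awk '{ printf "%s=%s\n", $2, $1 }'
}

# Fetch the target list and summarize it.
prom_targets() {
  curl -fsS "http://${PROM_ADDR}/api/v1/targets" | summarize_health
}

# Hot reload after editing the ConfigMap (requires --web.enable-lifecycle).
prom_reload() {
  curl -fsS -X POST "http://${PROM_ADDR}/-/reload"
}
```

Run `prom_ready` first, then `prom_targets`; a `down=` line points to a job whose relabeling or RBAC deserves a second look. The text-based parsing relies on Prometheus emitting compact JSON, so a real JSON parser would be the more robust choice where one is available.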

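kube-state-metrics

Introduction to kube-state-metrics

kube-state-metrics watches the API server and generates state metrics about resource objects such as Deployments, Nodes, and Pods. Note that kube-state-metrics only exposes these metrics; it does not store them, so we use Prometheus to scrape and store the data. The focus is on workload-related metadata such as Deployment, Pod, and replica status: How many replicas were scheduled? How many are available right now? How many Pods are running, stopped, or terminated? How many times has a Pod restarted? How many Jobs are currently running?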
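Each of these questions maps to a metric that kube-state-metrics exposes. As a rough sketch, the helper below pairs them with PromQL expressions and sends one to Prometheus's `/api/v1/query` endpoint, which only returns data once the exporter is deployed and scraped. The metric names are ones kube-state-metrics documents; the function names, the question keywords, and the `PROM_ADDR` default are assumptions for illustration.

```shell
#!/bin/sh
# PROM_ADDR is an assumed placeholder for <node IP>:<NodePort> of Prometheus.
PROM_ADDR="${PROM_ADDR:-192.168.0.11:30353}"

# Map a question keyword to a PromQL expression over kube-state-metrics data.
ksm_query() {
  case "$1" in
    desired)   echo 'kube_deployment_spec_replicas' ;;
    available) echo 'kube_deployment_status_replicas_available' ;;
    phases)    echo 'sum by (phase) (kube_pod_status_phase)' ;;
    restarts)  echo 'sum by (namespace, pod) (kube_pod_container_status_restarts_total)' ;;
    jobs)      echo 'sum(kube_job_status_active)' ;;
    *)         echo "unknown question: $1" >&2; return 1 ;;
  esac
}

# Run an instant query; -G with --data-urlencode sends it as a URL-encoded GET parameter.
promql() {
  curl -fsS -G "http://${PROM_ADDR}/api/v1/query" --data-urlencode "query=$1"
}

# Example (needs a reachable Prometheus): promql "$(ksm_query restarts)"
```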

The YAML file is as follows.

The namespace used here is prometheus.

[root@k8s-01 kube-metrics]# cat kube-metrics-deployment.yaml

apiVersion: v1

automountServiceAccountToken: false

kind: ServiceAccount

metadata:

labels:

app.kubernetes.io/component: exporter

app.kubernetes.io/name: kube-state-metrics

app.kubernetes.io/version: 2.4.2

name: kube-state-metrics

namespace: prometheus

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: exporter

app.kubernetes.io/name: kube-state-metrics

app.kubernetes.io/version: 2.4.2

name: kube-state-metrics

rules:

- apiGroups:

- ""

resources:

- configmaps

- secrets

- nodes

- pods

- services

- resourcequotas

- replicationcontrollers

- limitranges

- persistentvolumeclaims

- persistentvolumes

- namespaces

- endpoints

verbs:

- list

- watch

- apiGroups:

- apps

resources:

- statefulsets

- daemonsets

- deployments

- replicasets

verbs:

- list

- watch

- apiGroups:

- batch

resources:

- cronjobs

- jobs

verbs:

- list

- watch

- apiGroups:

- autoscaling

resources:

- horizontalpodautoscalers

verbs:

- list

- watch

- apiGroups:

- authentication.k8s.io

resources:

- tokenreviews

verbs:

- create

- apiGroups:

- authorization.k8s.io

resources:

- subjectaccessreviews

verbs:

- create

- apiGroups:

- policy

resources:

- poddisruptionbudgets

verbs:

- list

- watch

- apiGroups:

- certificates.k8s.io

resources:

- certificatesigningrequests

verbs:

- list

- watch

- apiGroups:

- storage.k8s.io

resources:

- storageclasses

- volumeattachments

verbs:

- list

- watch

- apiGroups:

- admissionregistration.k8s.io

resources:

- mutatingwebhookconfigurations

- validatingwebhookconfigurations

verbs:

- list

- watch

- apiGroups:

- networking.k8s.io

resources:

- networkpolicies

- ingresses

verbs:

- list

- watch

- apiGroups:

- coordination.k8s.io

resources:

- leases

verbs:

- list

- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

labels:

app.kubernetes.io/component: exporter

app.kubernetes.io/name: kube-state-metrics

app.kubernetes.io/version: 2.4.2

name: kube-state-metrics

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: kube-state-metrics

subjects:

- kind: ServiceAccount

name: kube-state-metrics

namespace: prometheus

---

apiVersion: v1

kind: Service

metadata:

annotations:

prometheus.io/scraped: "true" # 设置能被prometheus抓取到,因为不带这个annotation prometheus-service-endpoints 不会去抓这个metrics

labels:

app.kubernetes.io/component: exporter

app.kubernetes.io/name: kube-state-metrics

app.kubernetes.io/version: 2.4.2

name: kube-state-metrics

namespace: prometheus

spec:

# clusterIP: None # 允许通过svc来进行访问

ports:

- name: http-metrics

port: 8080

targetPort: http-metrics

- name: telemetry

port: 8081

targetPort: telemetry

selector:

app.kubernetes.io/name: kube-state-metrics

---

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app.kubernetes.io/component: exporter

app.kubernetes.io/name: kube-state-metrics

app.kubernetes.io/version: 2.4.2

name: kube-state-metrics

namespace: prometheus

spec:

replicas: 1

selector:

matchLabels:

app.kubernetes.io/name: kube-state-metrics

template:

metadata:

labels:

app.kubernetes.io/component: exporter

app.kubernetes.io/name: kube-state-metrics

app.kubernetes.io/version: 2.4.2

spec:

nodeName: k8s-01 # 设置在k8s-master-1上运行

tolerations: # 设置能容忍在master节点运行

- key: "node-role.kubernetes.io/master"

operator: "Exists"

effect: "NoSchedule"

automountServiceAccountToken: true

containers:

# - image: k8s.gcr.io/kube-state-metrics/kube-state-metrics:v2.4.2

- image: anjia0532/google-containers.kube-state-metrics.kube-state-metrics:v2.4.2

livenessProbe:

httpGet:

path: /healthz

port: 8080

initialDelaySeconds: 5

timeoutSeconds: 5

name: kube-state-metrics

ports:

- containerPort: 8080

name: http-metrics

- containerPort: 8081

name: telemetry

readinessProbe:

httpGet:

path: /

port: 8081

initialDelaySeconds: 5

timeoutSeconds: 5

securityContext:

allowPrivilegeEscalation: false

capabilities:

drop:

- ALL

readOnlyRootFilesystem: true

runAsUser: 65534

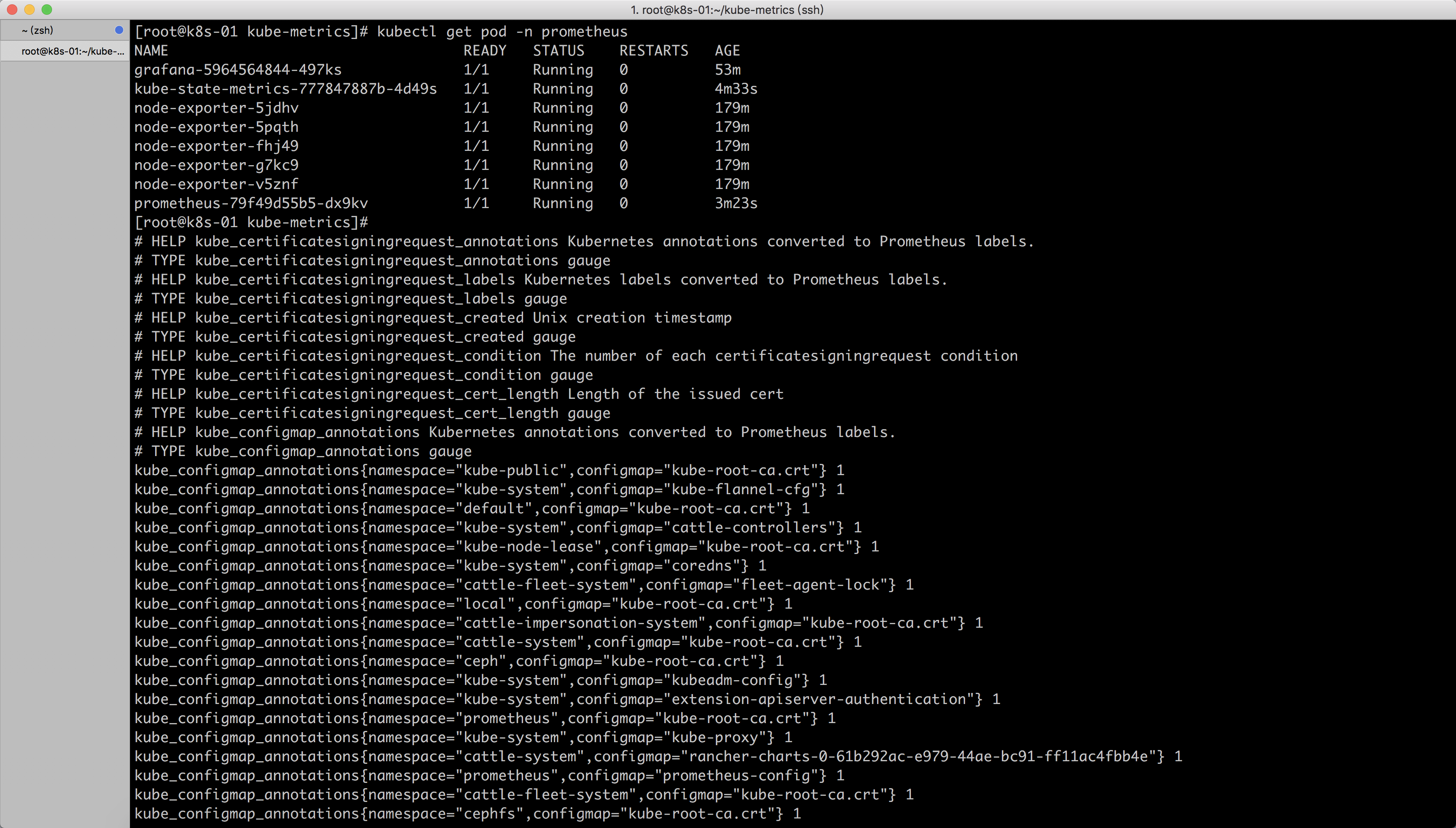

serviceAccountName: kube-state-metrics创建完成后检查pod状态

[root@k8s-01 kube-metrics]# kubectl get pod -n prometheus
NAME                                  READY   STATUS    RESTARTS   AGE
grafana-5964564844-497ks              1/1     Running   0          53m
kube-state-metrics-777847887b-4d49s   1/1     Running   0          4m33s
node-exporter-5jdhv                   1/1     Running   0          179m
node-exporter-5pqth                   1/1     Running   0          179m
node-exporter-fhj49                   1/1     Running   0          179m
node-exporter-g7kc9                   1/1     Running   0          179m
node-exporter-v5znf                   1/1     Running   0          179m
prometheus-79f49d55b5-dx9kv           1/1     Running   0          3m23s

Check whether the svc exposes metric data
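One way to run that check without a browser is to go through the API server's service proxy, which `kubectl get --raw` can call. This is a sketch under the assumption that your kubeconfig user is allowed to proxy to Services; the helper names are made up, while the namespace, Service name, and port name come from the manifest above.

```shell
#!/bin/sh
# Build the API-server proxy path for a named Service port:
#   /api/v1/namespaces/<ns>/services/<name>:<port-name>/proxy/<path>
svc_proxy_path() {
  printf '/api/v1/namespaces/%s/services/%s:%s/proxy/%s\n' "$1" "$2" "$3" "$4"
}

# Count sample lines on stdin whose metric name starts with the given prefix.
# "# HELP" and "# TYPE" lines are skipped because they start with '#'.
count_samples() {
  grep -c "^$1" || true   # grep -c still prints 0 when nothing matches
}

# Fetch the kube-state-metrics output through the API server (needs cluster access).
ksm_metrics() {
  kubectl get --raw "$(svc_proxy_path prometheus kube-state-metrics http-metrics metrics)"
}

# Example: ksm_metrics | count_samples kube_pod_status_phase
```

A non-zero count for a prefix such as `kube_pod_status_phase` suggests the exporter is serving data; a count of 0 or an error from `kubectl` points back at the Deployment or its RBAC.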

Grafana

Next, install Grafana. This is also based on the earlier article, except that Grafana now uses persistent storage.

[root@k8s-01 grafana]# cat grafana-pvc.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: grafana-data
  namespace: prometheus
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 50Gi
  storageClassName: csi-rbd-sc

After creating the PVC, create the Deployment and the Service

[root@k8s-01 grafana]# cat grafana-svc.yaml
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: prometheus
  labels:
    app: grafana
spec:
  type: NodePort
  ports:
  - port: 3000
  selector:
    app: grafana

# Deployment
[root@k8s-01 grafana]# cat grafana-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: prometheus
  labels:
    app: grafana
spec:
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      containers:
      - name: grafana
        image: grafana/grafana
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 3000
          name: grafana
        env:
        - name: GF_SECURITY_ADMIN_USER
          value: admin
        - name: GF_SECURITY_ADMIN_PASSWORD
          value: abcdocker
        readinessProbe:
          failureThreshold: 10
          httpGet:
            path: /api/health
            port: 3000
            scheme: HTTP
          initialDelaySeconds: 60
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 30
        livenessProbe:
          failureThreshold: 3
          httpGet:
            path: /api/health
            port: 3000
            scheme: HTTP
          periodSeconds: 10
          successThreshold: 1
          timeoutSeconds: 1
        resources:
          limits:
            cpu: 300m
            memory: 1024Mi
          requests:
            cpu: 300m
            memory: 1024Mi
        volumeMounts:
        - mountPath: /var/lib/grafana
          subPath: grafana
          name: storage
      securityContext:
        fsGroup: 472
        runAsUser: 472
      volumes:
      - name: storage
        persistentVolumeClaim:
          claimName: grafana-data

Apply all the Grafana YAML files

[root@k8s-01 grafana]# kubectl apply -f .检查grafana

[root@k8s-01 grafana]# kubectl get all -n prometheus |grep grafana

pod/grafana-5964564844-497ks 1/1 Running 0 85m

service/grafana NodePort 10.110.65.126 <none> 3000:31923/TCP 3h5m

deployment.apps/grafana 1/1 1 1 3h12m

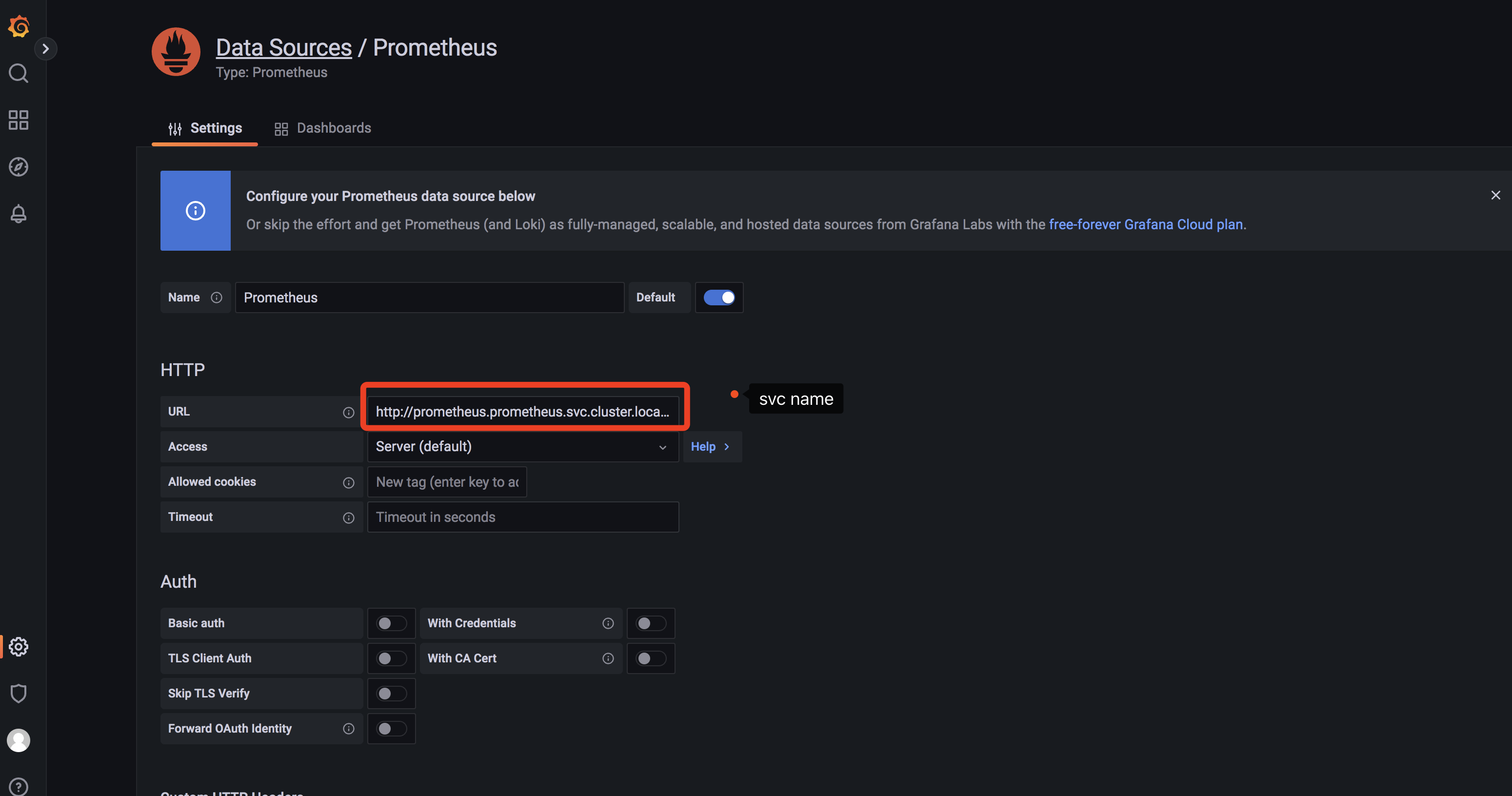

replicaset.apps/grafana-5964564844 1 1 1 3h12m配置grafana

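Only a few key Grafana settings are covered here.

- Set the Prometheus data source

http://prometheus.prometheus.svc.cluster.local:9090

The middle "prometheus" in this URL is the namespace; change it if yours differs. If this is unfamiliar, read up on how Kubernetes Services are addressed.

- Recommended K8s monitoring dashboards

https://grafana.com/grafana/dashboards/15661

ID: 15661

Preview

https://grafana.com/grafana/dashboards/6417

ID: 6417

The data does not update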
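The data source URL above follows the in-cluster DNS pattern `<service>.<namespace>.svc.cluster.local`. As a sketch, the helper below builds that URL and, optionally, registers the data source through Grafana's `POST /api/datasources` HTTP API instead of the UI. The function names and the `GRAFANA_ADDR` placeholder are assumptions; the admin credentials are the ones set in the Deployment's environment variables, and `cluster.local` is assumed to be the cluster domain.

```shell
#!/bin/sh
# Build the in-cluster URL of a Service: http://<svc>.<namespace>.svc.cluster.local:<port>
svc_url() {
  printf 'http://%s.%s.svc.cluster.local:%s\n' "$1" "$2" "$3"
}

# JSON body for a Prometheus data source that Grafana queries server-side ("proxy" access).
datasource_json() {
  printf '{"name":"Prometheus","type":"prometheus","access":"proxy","url":"%s","isDefault":true}\n' "$1"
}

# GRAFANA_ADDR is an assumed placeholder: <node IP>:<NodePort of service/grafana>.
add_prometheus_datasource() {
  curl -fsS -u "admin:abcdocker" -H 'Content-Type: application/json' \
    -X POST "http://${GRAFANA_ADDR}/api/datasources" \
    -d "$(datasource_json "$(svc_url prometheus prometheus 9090)")"
}

svc_url prometheus prometheus 9090   # prints http://prometheus.prometheus.svc.cluster.local:9090
```

If kube-state-metrics or Prometheus lives in another namespace, only the second argument to `svc_url` changes.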
